I’m a scientist. Or at least that’s the job title we use at the lab: I study psychological science. Sometimes we do what we consider important work, like applying our expertise to depression detection and prevention [1], but sometimes we do things just because they’re cool: stuff that’s probably unpublishable, but that I keep wanting to talk about because, well, it’s cool.

One of those things was an attempt to answer a rather silly question: can someone’s profile picture tell us something about their personality? Lay psychology would predict that the way people choose to portray themselves is affected by their personality. Turns out this question has already been addressed [2] (tl;dr: to some degree, yes). But I still wanted to give it a shot, not from the personality perspective specifically, but by reframing the question: what type of language is associated with the emotions expressed in profile pictures?

Plan outline

- Pull some data from our Twitter database (we scrape Twitter for research purposes)

- Analyze the text using LIWC dictionaries [3]

- Analyze the affective facial expression in profile pics through Google Vision

- Correlate the two

Pull Data from Twitter + LIWC Analysis

As mentioned before, we regularly pull data from the Twitter API to study the effects of all sorts of events on language.

I’ve taken about 9 days of Twitter data. For privacy reasons (and out of laziness on my part) I won’t show how this was done, but rest assured I only analyzed individuals with at least 10 tweets and aggregated by user ID, winding up with 20,528 observations (users).

LIWC is dictionary-based software that computes the percentage of words in a text that belong to each of its dictionaries. It has over 70 dictionaries covering linguistic features (e.g., personal pronouns) and psychologically relevant ones (e.g., cognitive processes). For example, if I feed LIWC the sentence “i am sad”, I’ll get back 33.33 on sadness (1 of the 3 words), but also 33.33 on personal pronouns.
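The core idea behind LIWC (the percentage of words that fall into each dictionary) is easy to sketch. Here’s a toy version in R, assuming made-up dictionaries and simple tokenization on non-letter characters; `toy_dicts` and `liwc_like_score` are hypothetical names, not LIWC’s actual word lists or interface, and the real LIWC also matches wildcard word stems, which this ignores.

```r
# Toy dictionaries: hypothetical stand-ins, NOT the real LIWC word lists
toy_dicts <- list(
  sad   = c("sad", "cry", "grief"),
  ppron = c("i", "you", "we", "he", "she", "they")
)

# Percentage of words in `text` that fall into each dictionary
liwc_like_score <- function(text, dicts) {
  # Lowercase and split on anything that isn't a letter or apostrophe
  words <- tolower(unlist(strsplit(text, "[^A-Za-z']+")))
  words <- words[words != ""]
  # Exact word matching only (real LIWC also supports stems like "cry*")
  sapply(dicts, function(d) 100 * sum(words %in% d) / length(words))
}

liwc_like_score("i am sad", toy_dicts)
```

On “i am sad” this gives 33.33 for both `sad` and `ppron`, matching the example above: one word of three lands in each dictionary.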

Here’s a glimpse of what the data look like on the sadness dimension:

| user.id | sad (%) |
|---|---|
| 8982c05a | 1.1475000 |
| 53cf4674 | 0.0000000 |
| e36afb93 | 1.1113333 |
| ccf939d0 | 0.4327273 |
| 7727e5f3 | 0.0000000 |
| d705113b | 0.0000000 |
| bd8d123d | 0.0000000 |
| 7877ced5 | 0.0000000 |
| ddb2d85f | 0.5324359 |
| 524eb065 | 0.0000000 |
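The exact aggregation step isn’t shown here, so this is only one plausible way to get from per-tweet scores to one row per user: a minimal sketch, assuming LIWC was run on each tweet, users with fewer than 10 tweets were dropped, and the remaining scores were averaged within each user. The `tweets` data frame and its values are made up for illustration.

```r
# Hypothetical per-tweet scores: one row per tweet (made-up values)
tweets <- data.frame(
  user.id = c(rep("u1", 10), rep("u2", 3)),
  sad     = c(rep(0, 8), 5, 5, 1, 2, 3)
)

# Count tweets per user and keep only users with at least 10 of them
n_tweets <- table(tweets$user.id)
active   <- names(n_tweets)[n_tweets >= 10]
kept     <- tweets[tweets$user.id %in% active, ]

# Average the per-tweet scores within each remaining user
by_user <- aggregate(sad ~ user.id, data = kept, FUN = mean)
by_user
```

Here `u1` ends up with a mean sad score of 1, while `u2` (only 3 tweets) is dropped before averaging.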

Analyze Profile Pic

The next step is to get each user’s Twitter profile pic. I used the ol’ twitteR package; here it is for a single account:

library(twitteR)
# Twitter API credentials (replace the placeholders with your own app's keys)
Consumer_Key <- 'YourConsumerKey'
Consumer_Secret <- 'YourSecretKey'
Access_Token <- 'YourAccessToken'
Access_Token_Secret <- 'YourAccessTokenSecret'
# Set up the Twitter OAuth connection
setup_twitter_oauth(Consumer_Key, Consumer_Secret, Access_Token, Access_Token_Secret)
# Get the URL of Donald Trump's profile pic
user <- getUser("realDonaldTrump")
img <- user$profileImageUrl

Next, let’s set up the connection with Google Vision using RoogleVision:

# Install RoogleVision from GitHub (only needed once)
devtools::install_github("cloudyr/RoogleVision")
library(RoogleVision)
### Setup Google Vision
options("googleAuthR.client_id" = "YourGoogleClientId.apps.googleusercontent.com")
options("googleAuthR.client_secret" = "YourClientSecret")
options("googleAuthR.scopes.selected" = c("https://www.googleapis.com/auth/cloud-platform"))
googleAuthR::gar_auth()

# My own custom function: swap the small "_normal" thumbnail URL for the
# 400x400 version of the profile pic, then send it to Google Vision face detection
get_face <- function(img){
  img2 <- stringr::str_replace(img, stringr::fixed("_normal"), "_400x400")
  a <- getGoogleVisionResponse(img2, feature = "FACE_DETECTION")
  # Keep only the likelihood labels of the first detected face
  emo <- c("joy" = a$joyLikelihood[1], "sorrow" = a$sorrowLikelihood[1],
           "anger" = a$angerLikelihood[1], "surprise" = a$surpriseLikelihood[1])
  return(emo)
}

For Trump’s profile pic, we get back a vector of likelihoods on a 5-point scale, from VERY_UNLIKELY to VERY_LIKELY:

| emotion | likelihood |
|---|---|
| joy | VERY_UNLIKELY |
| sorrow | UNLIKELY |
| anger | VERY_UNLIKELY |
| surprise | VERY_UNLIKELY |

Trump’s profile pic is best described as sorrowful, though only with a score of 2/5 (UNLIKELY). SAD!
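To correlate these labels with anything, they need to become numbers. A minimal sketch, assuming the five labels are simply coded 1 to 5 in order and that anything else (such as Google’s `UNKNOWN`) is treated as missing; `likelihood_to_score` is a hypothetical helper, not part of RoogleVision.

```r
# Google Vision likelihood labels, ordered from least to most likely
likelihood_levels <- c("VERY_UNLIKELY", "UNLIKELY", "POSSIBLE",
                       "LIKELY", "VERY_LIKELY")

# Convert labels to 1-5 codes; anything not in the list (e.g. "UNKNOWN") becomes NA
likelihood_to_score <- function(labels) {
  match(labels, likelihood_levels)
}

# The labels returned for Trump's profile pic in the table above
trump <- c(joy = "VERY_UNLIKELY", sorrow = "UNLIKELY",
           anger = "VERY_UNLIKELY", surprise = "VERY_UNLIKELY")
scores <- likelihood_to_score(trump)
names(scores) <- names(trump)
scores
```

This gives joy = 1, sorrow = 2, anger = 1 and surprise = 1, which is where the 2/5 for sorrow comes from.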

Correlate

Now we can go back to our Twitter users and get the emotion likelihoods for their profile pics. Each likelihood is coded as a number from 1 (VERY_UNLIKELY) to 5 (VERY_LIKELY). Since we’re dealing with an ordinal scale, it’s best to correlate the variables with Spearman’s rank correlation coefficient.
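Why Spearman? It’s just Pearson’s r computed on ranks, so it only uses the ordering of the 1-5 codes, not the (arbitrary) distances between them. A quick check with made-up numbers, where `joy` and `swear` are hypothetical values for six users:

```r
# Hypothetical 1-5 joy codes and swear-word percentages for six users
joy   <- c(1, 2, 2, 3, 5, 4)
swear <- c(2.5, 1.0, 1.8, 0.4, 0.0, 0.7)

# Spearman's rho as computed by cor()
rho <- cor(joy, swear, method = "spearman")

# The same thing by hand: Pearson's r on the ranks (ties get their average rank)
rho_by_hand <- cor(rank(joy), rank(swear))

# Only the ordering matters: a monotone recoding of the scale leaves rho unchanged
rho_recoded <- cor(joy^2, swear, method = "spearman")

c(rho = rho, by_hand = rho_by_hand, recoded = rho_recoded)
```

All three values match, and for this toy data they are negative: users with higher joy codes swear less.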

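library(knitr)
# Spearman correlations between every pair of columns in dt (one row per user)
corrs <- as.data.frame(cor(dt, use = "pairwise.complete.obs", method = "spearman"))
# Let's keep only the variables with |r| > 0.1 against joyful facial expression
keep <- which(abs(corrs$joy_face_emotion) > 0.1)
kable(data.frame(vars = rownames(corrs)[keep],
                 coeff = round(corrs$joy_face_emotion[keep], digits = 2)))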

| vars | Spearman’s ρ with joyful facial expression |
|---|---|
| joy_face_emotion | 1.00 |
| suprise_face_emotion | -0.29 |
| anger_LIWC | -0.11 |
| sexual_LIWC | -0.11 |
| swear_LIWC | -0.14 |

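Noice! Joyful facial expression is negatively correlated with surprise. Not interesting: both are ratings of the same face, so a face rated as joyful is less likely to also be rated as surprised.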

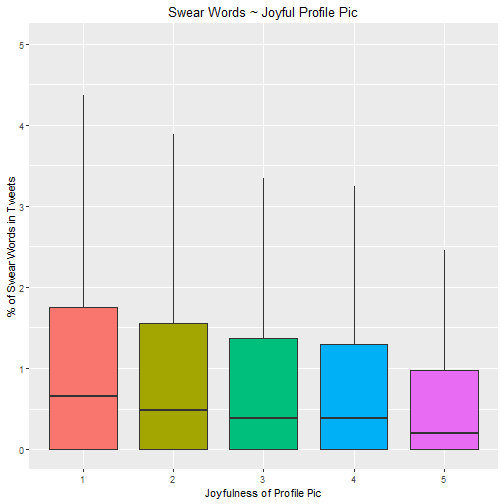

BUT joyful profile pics also seem to be negatively correlated with the use of anger, sexual, and swear words in tweets, albeit weakly (ρ between -0.11 and -0.14). Which kinda makes sense if you think about it.

Let’s look at the boxplots for swear words:

library(ggplot2)
# One box per joy level (1-5); outliers hidden and the y-axis zoomed to 0-5%
ggplot(dt, aes(as.factor(joy_face_emotion), swear_LIWC)) +
  geom_boxplot(aes(fill = as.factor(joy_face_emotion)), outlier.shape = NA) +
  coord_cartesian(ylim = c(0, 5)) +
  theme(plot.title = element_text(hjust = 0.5), legend.position = "none") +
  labs(x = "Joyfulness of Profile Pic", y = "% of Swear Words in Tweets",
       title = "Swear Words ~ Joyful Profile Pic")
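The middle line of each box is the group median, so the same pattern can also be read off numerically. A sketch on a made-up `dt_toy` (hypothetical values standing in for the real `dt`, using the same column names):

```r
# Made-up example: joy codes (1-5) and swear-word % for ten hypothetical users
dt_toy <- data.frame(
  joy_face_emotion = c(1, 1, 2, 2, 3, 3, 4, 4, 5, 5),
  swear_LIWC       = c(2.0, 1.0, 1.5, 0.5, 0.8, 0.4, 0.3, 0.5, 0.0, 0.2)
)

# Median swear-word % at each joy level (the middle line of each box)
medians <- aggregate(swear_LIWC ~ joy_face_emotion, data = dt_toy, FUN = median)
medians
```

With the real data, you’d pass `dt` instead of `dt_toy`.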

Sum it up, won’t you?

- I estimated the joyfulness of Twitter users’ profile pics with Google Vision face detection

- I analyzed those users’ tweets with a dictionary-based method (LIWC)

- Joyful profile pics are weakly negatively correlated with anger, sexual, and swear words

If you liked this post, give me a shoutout on Twitter!

[1]: Simchon, A., & Gilead, M. (2018, June). A Psychologically Informed Approach to CLPsych Shared Task 2018. In Proceedings of the Fifth Workshop on Computational Linguistics and Clinical Psychology: From Keyboard to Clinic (pp. 113-118).

[2]: Liu, L., Preotiuc-Pietro, D., Samani, Z. R., Moghaddam, M. E., & Ungar, L. (2016, March). Analyzing personality through social media profile picture choice. In Tenth International AAAI Conference on Web and Social Media.

[3]: Pennebaker, J. W., Boyd, R. L., Jordan, K., & Blackburn, K. (2015). The development and psychometric properties of LIWC2015.